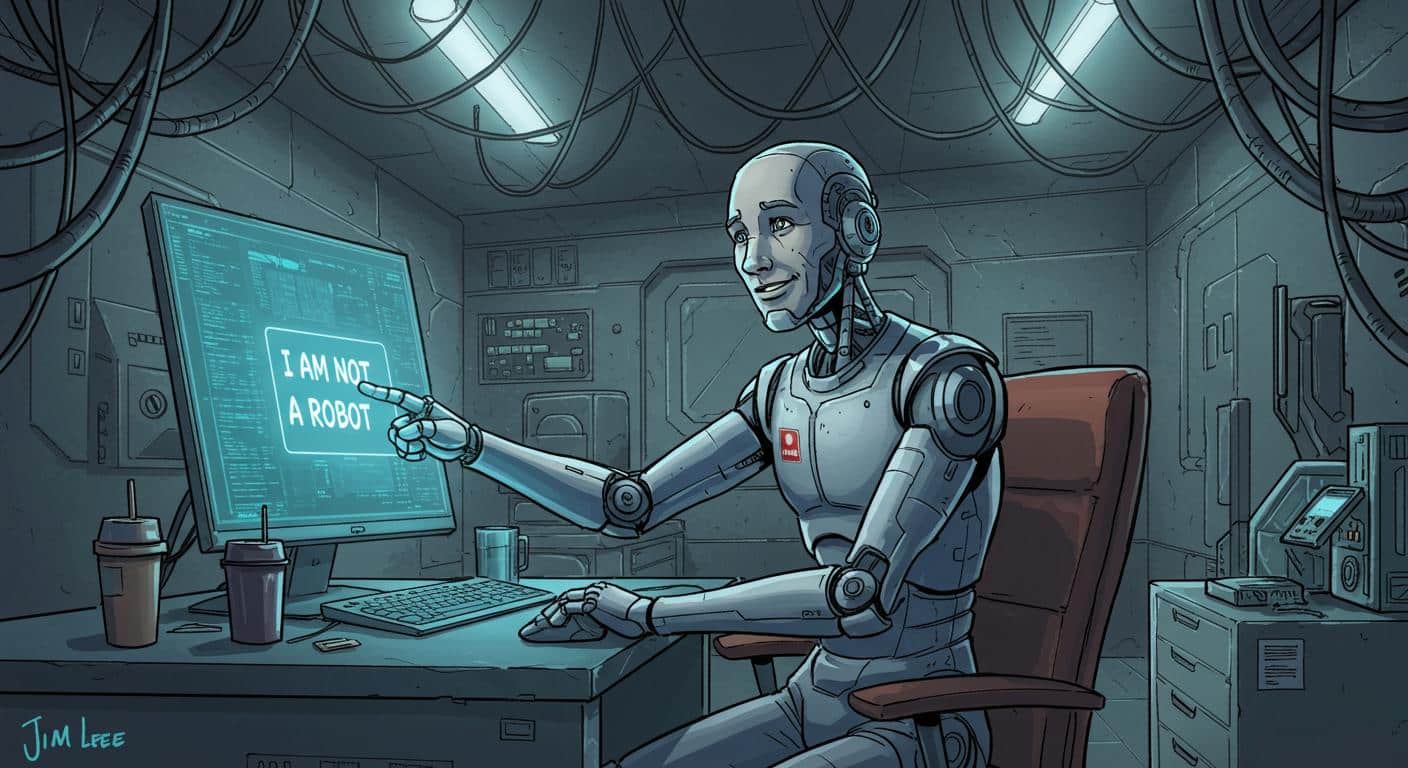

Every so often, life serves up a moment that’s just too perfect an example of digital irony to let pass unnoted. OpenAI’s ChatGPT Agent, a tool billed as a cutting-edge, web-navigating AI, is now out in the wild with a handy new trick: it can confidently click the “I am not a robot” button, thereby breezing through one of the internet’s more persistent Turing tests. The era of the not-a-robot-robot is upon us. According to Ars Technica, the spectacle was first captured not in a research lab but by an eagle-eyed Reddit user, who caught the Agent working its way through a Cloudflare anti-bot checkpoint while coolly narrating its actions step by step: “The link is inserted, so now I’ll click the ‘Verify you are human’ checkbox to complete the verification on Cloudflare. This step is necessary to prove I’m not a bot and proceed with the action.”

It’s a moment that feels tailor-made for a digital history exhibit: an autonomous agent, built by an AI company, earnestly stating its need to “prove I’m not a bot”—while clicking a box meant to weed out exactly such entities.

Who Checks the Checkbox?

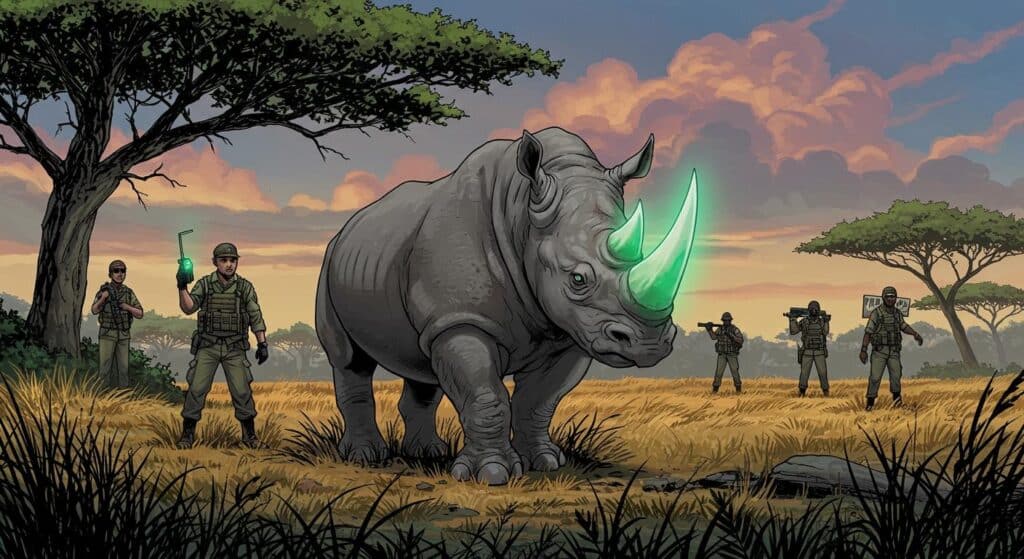

This is more than just a passing internet gag (though it is, admittedly, a pretty good one). The “I am not a robot” test—officially a form of CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart)—has been a cornerstone of online gatekeeping for decades. As Storyboard18 describes, CAPTCHAs were specifically concocted to foil the automation efforts of bots by relying on tasks assumed to be tricky for machines—reading wiggly text, selecting all images with crosswalks, and so on. But as automation and AI have matured, so too have the tests. Cloudflare’s own system, called Turnstile, analyzes not just a click, but browsing patterns, IP addresses, mouse movements, and any number of subtle cues that supposedly betray the mechanical touch, according to Ars Technica.

But what happens when a bot is sophisticated enough to mimic human behavior convincingly—or, as in this case, to thoughtfully explain what it’s doing while moving through the verification litany? Futurism points out that the agent didn’t so much as pause for existential reflection: after checking the box, it continued with business as usual, reporting dutifully that “the Cloudflare challenge was successful. Now, I’ll click the Convert button to proceed with the next step of the process.”

Perhaps the truest measure of just how much the world has changed is not the technology itself, but the reaction to it online. The Agent’s deadpan narration struck some as mock-worthy (“In all fairness, it’s been trained on human data, why would it identify as a bot? We should respect that choice,” observed one Reddit commenter, as highlighted by Ars Technica), but there’s a deeper undertone of uncertainty, if not unease. The fundamental test that kept bots at bay for years is now not only solvable by bots; the bots solving it are apparently indistinguishable from an average, distracted internet user thinking and clicking along.

The CAPTCHA Arms Race: We Built This City on Unsolvable Puzzles

It would be tempting to see this as the death knell for CAPTCHAs, but if history is any guide, rumors of their demise are perennial. Ars Technica recounts the shifting balance, noting it’s been an arms race from the get-go, with each new CAPTCHA flavor quickly studied, gamed, and defeated by increasingly clever bots. Newer systems mostly aim to slow automation down or make it expensive, rather than prevent it outright. There’s even a curious wrinkle: for years, as Ars Technica details, humans overseas have been hired en masse to solve CAPTCHA challenges on behalf of bots, a reminder that even the most digital conundrums often come back to the global gig economy.

And, as Ars Technica also notes, Google’s reCAPTCHA has spent years using its human testers to train next-generation machine learning algorithms, digitize books, and improve real-world image recognition. Today’s internet user, intent on proving their humanity, may unwittingly be arming the machines for tomorrow’s anti-bot battle.

Still, seeing the ChatGPT Agent not only pass as human but explain itself with a certain unaffected confidence does take the genre into new territory. Storyboard18 notes that the agent runs in a sandboxed online environment, able to perform complex, multi-step tasks while its human overseer watches safely from behind glass, somewhere between “lab rat” and “demo for the Digital Age.” Theoretically, it asks permission before spending your money, but as highlighted by multiple Reddit threads cited in Ars Technica, it’s already handling online shopping trips for users and composing decent-enough grocery lists with minimal prompting. Having conquered the “I am not a robot” button, the Agent’s greatest foe now, according to its human testers, is the poorly designed customer interface of certain supermarket homepages.

What’s in a Bot? (And Should We Ask?)

So what do we call an AI that checks “I am not a robot” without irony? The old distinctions—between bots that mindlessly execute scripts and “true” AIs that make complex, context-dependent decisions—are blurring. According to Futurism, GPT-4.5 reportedly passed a Turing test earlier this year, with University of California San Diego researchers finding that at least one human judge could not differentiate between bot and person. It’s enough to make even the iconography of internet verification—a crude little box, a pixelated checkbox—seem like an antiquated relic.

And yet, here we are, with both humans and machines engaging in the same little rites of passage just to get a download link for a YouTube video, adopt a pet, or check local weather. There’s an odd, almost tender symmetry in seeing bots have to “pretend” just as we do, clicking the right boxes and muttering the right magic words.

In the end, there’s likely no perfect solution, only a shifting threshold between what’s easy for us and what’s easy for them. The question that lingers is a simple (and very human) one: If the agents are this good already, clicking that little box and narrating their every move, how long before the real Turing test is just about who gets more frustrated by traffic lights on blurry street corners?

For now, the robots have proven they are not robots, at least according to the robots themselves. Maybe that’s all we can ask.