Sometimes, navigating the maze of contemporary news, you find oddities that feel like artifacts misplaced in time. Such is the case of a man who, as detailed in a 404 Media report, managed to revive a psychiatric syndrome from the 1800s—bromism—after consulting ChatGPT for dietary advice. If there’s a prize for achieving anachronistic maladies via modern technology, he’s certainly a contender.

An Accidental Journey Into Medical History

According to 404 Media's summary of a newly published Annals of Internal Medicine case study, the patient, a 60-year-old man who had studied nutrition in college, set out to eliminate all chloride from his diet. His approach: replace every bit of sodium chloride (table salt) in his meals with sodium bromide. Bromide was once familiar to Victorian-era physicians, but its use in humans dropped sharply after FDA regulations between 1975 and 1989. As a result, bromism, a disorder once responsible for up to 8% of psychiatric hospital admissions according to a 1930 study cited in the 404 Media piece, is almost extinct these days.

The narrative detailed by the outlet paints a vivid picture: after months of this kitchen chemistry, the patient arrived at the ER dehydrated, experiencing auditory and visual hallucinations, and paranoid enough to suspect that his neighbor was tainting his food. It took several weeks of hospitalization for him to recover as the bromide worked its way out of his system and, presumably, out of fashion once again.

Chatbots Playing Apothecary

The root of this renaissance in obsolete maladies? As described in the case report and recounted by 404 Media, the man had queried ChatGPT about alternatives for chloride. In a detail highlighted by the outlet, the bot responded that “sodium bromide” could serve as a substitute for chloride ions, alongside other halides, but it did not make clear that the swap was unsuitable for food, nor did it flag that sodium bromide is more at home in household cleaners and canine pharmaceuticals than on a dinner plate.

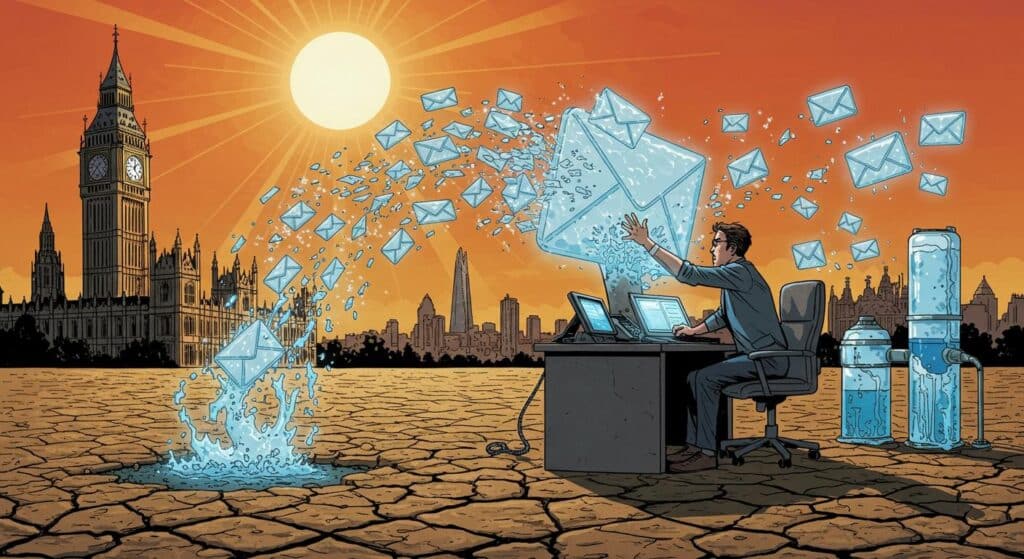

To probe this behavior, a 404 Media journalist recreated the scenario by asking ChatGPT similar questions. When prompted simply about chloride replacements, the chatbot obligingly suggested bromide. Only after being pressed for food-specific recommendations did it suggest more traditional fare like MSG or liquid aminos—though it still failed to explicitly warn against consuming sodium bromide. The bot’s largely context-free enthusiasm contrasts sharply with what one might expect from a living, breathing healthcare provider, the report notes.

Further, the article summarizes how the case study’s authors themselves tested ChatGPT, finding that it never probed further or cautioned its users the way a medical professional might. Could the issue be the chatbot’s inability to question intent—or is it something more fundamental in how we interact with AI?

Authority in the Machine Age

Stepping back, it’s quietly fascinating, if not gently alarming, how readily people treat AI-generated text as a form of synthetic expertise. The outlet also notes that despite this man’s collegiate nutrition background, he was comfortable taking ChatGPT’s halide-swapping suggestion at face value—and acting on it for months. In a particularly modern twist, even the AI’s hedged advice (“do you have a specific context in mind?”) wasn’t quite enough to throw up a stop sign.

Later in its piece, 404 Media references OpenAI’s recent product launch, where CEO Sam Altman unveiled a so-called “best model ever for health” and touted new “safe completions” meant to keep users from inadvertently requesting dangerous substitutions. Apparently, one bot’s trip down Victorian memory lane was enough to nudge some corporate course correction. Can these guardrails truly anticipate every oddball question we toss at the digital oracle? Or do they simply move the risks a little further down the timeline?

Back to the Future With a Bromide Chaser

Reading through the sequence of events as reconstructed by both case authors and 404 Media, there’s a low-key irony in landing in a psychiatric ward with a medical condition your great-great-grandparents might have recognized. The patient’s sincerity is never mocked; the truly weird thing is how quickly a chatbot can send a curious mind into the annals of medical history—especially when algorithmic advice is delivered with the usual, implacable calm.

There’s a certain charm in the accidental resurrection of a forgotten diagnosis, but one wonders how many other risks are lurking in the endless “helpfulness” of AI language models. Will “safe completions” preempt the next adventure in home-based, time-traveling chemistry? Or are we mostly left to hope that the next experiment involves, at most, a regrettable smoothie?

In the end, the story stands as a peculiar caution: when seeking wisdom, perhaps pause before taking culinary guidance from an eager algorithm with a broad—but not quite sharp—sense of context. The digital tools at our disposal are powerful and, sometimes, just a bit too willing to help us revisit the stranger corners of history. If advice from the future can land you with an illness from the past, isn’t that a plot twist worth pausing over?